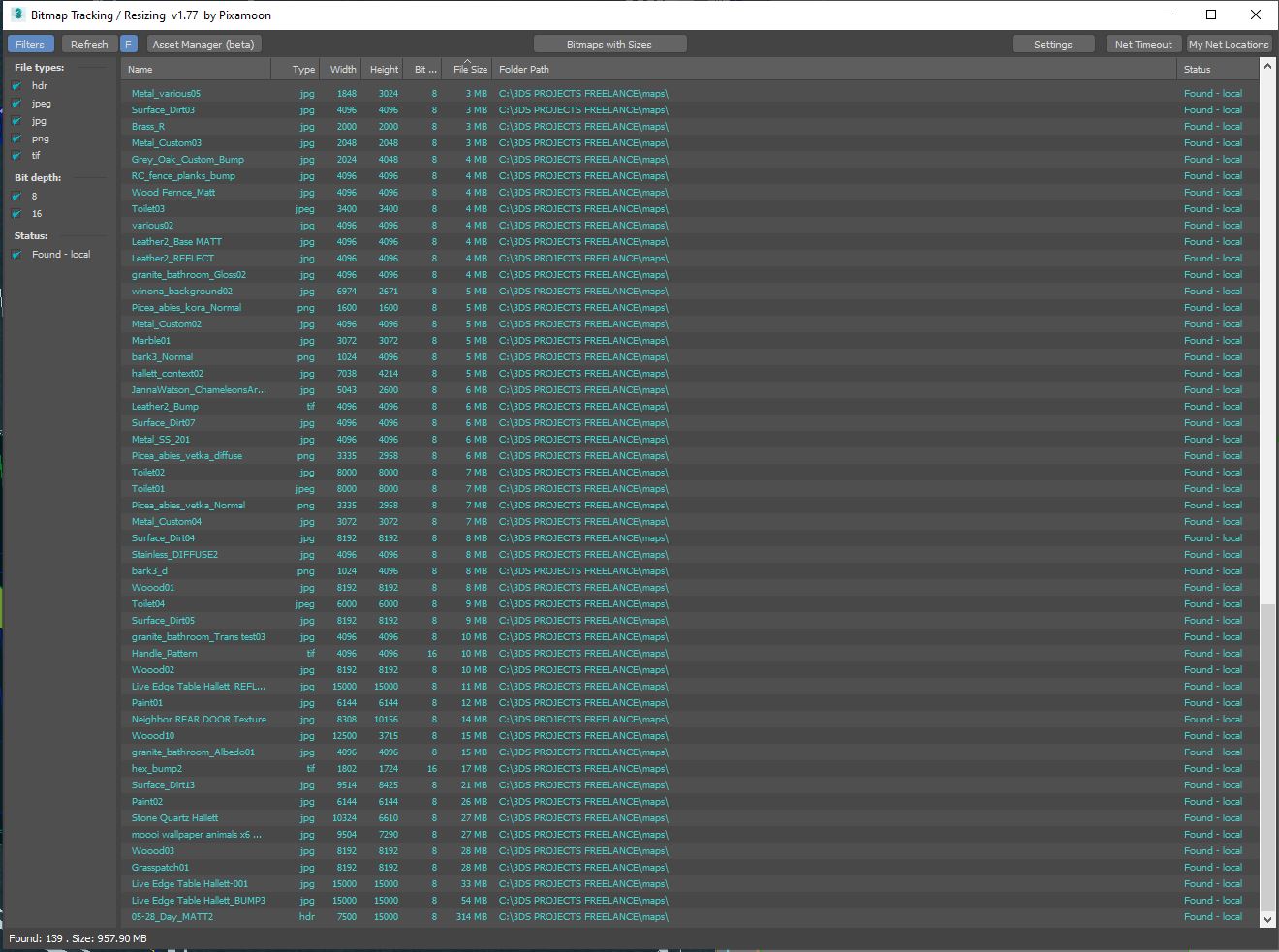

Every element had to be an exact reproduction so that it would match the photography. I combined measurement notes with the reference images, starting with the kitchen appliances. Many manufacturers offer free 3D files of their products directly on their websites, and 3D Warehouse is another good source for quick free models. Free models often need refinement and extra detail, but they are a good starting point.

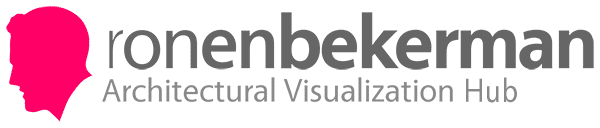

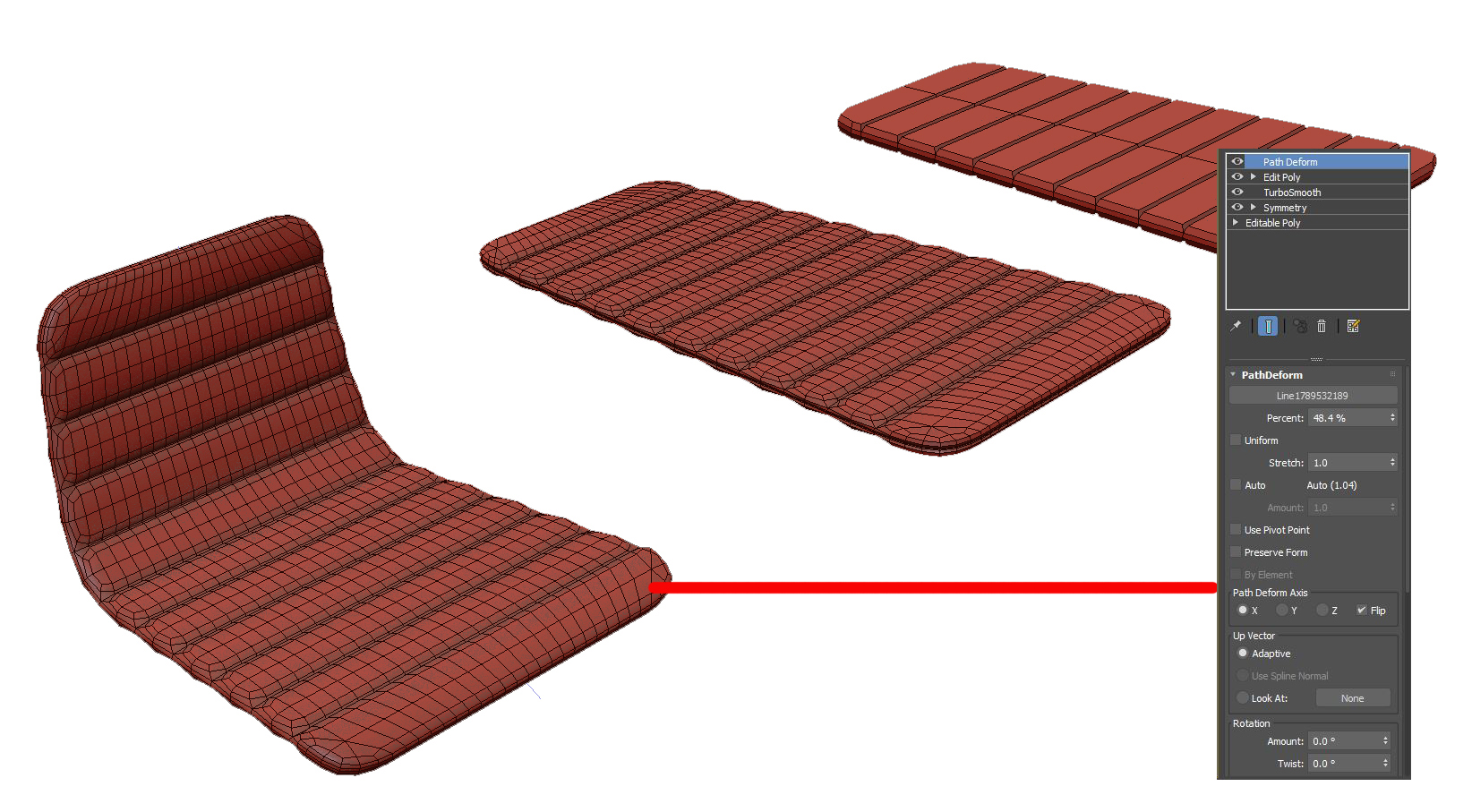

To create the stool, I imagined it as a flat object bent to form the back and seat. Starting with simple polygons, I crudely shaped the folds and added thickness, knowing the mesh would be smoothed with a TurboSmooth modifier. I then bent that mesh with a Path Deform modifier to create the final shape. The stitches are made with the MCG Stitches script, which runs along a spline generated from the final mesh.
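To make the "flat object bent along a path" idea concrete, here is a minimal Python sketch of the concept. It is not how the Path Deform modifier is implemented in 3ds Max; the function name `path_deform`, the polyline path, and the axis convention (x runs along the path, y is the width, z is the thickness) are all assumptions chosen for illustration.

```python
import math

def path_deform(vertices, path):
    """Bend flat-mesh vertices (x, y, z) along a 2D polyline in the XZ plane.

    x is treated as distance along the path, y is kept as the width,
    and z is applied as an offset along the path's left-hand normal.
    Assumes the path has no zero-length segments.
    """
    seg_lengths = [math.dist(path[i], path[i + 1]) for i in range(len(path) - 1)]
    total = sum(seg_lengths)
    result = []
    for x, y, z in vertices:
        # Clamp the travel distance to the length of the path.
        remaining = min(max(x, 0.0), total)
        for i, seg_len in enumerate(seg_lengths):
            if remaining <= seg_len or i == len(seg_lengths) - 1:
                (x0, z0), (x1, z1) = path[i], path[i + 1]
                # Unit tangent of this segment and its left-hand normal.
                tx, tz = (x1 - x0) / seg_len, (z1 - z0) / seg_len
                nx, nz = -tz, tx
                result.append((x0 + tx * remaining + nx * z,
                               y,
                               z0 + tz * remaining + nz * z))
                break
            remaining -= seg_len
    return result

# Hypothetical L-shaped profile: a horizontal seat, then a vertical back.
profile = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0)]
flat = [(0.5, 0.25, 0.0), (1.5, 0.25, 0.0), (1.5, 0.25, 0.125)]
bent = path_deform(flat, profile)
```

A vertex that sat 1.5 units along the flat strip ends up halfway up the back, and the thickness offset follows the surface around the bend. A real spline would give a rounded corner instead of the hard one in this polyline.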

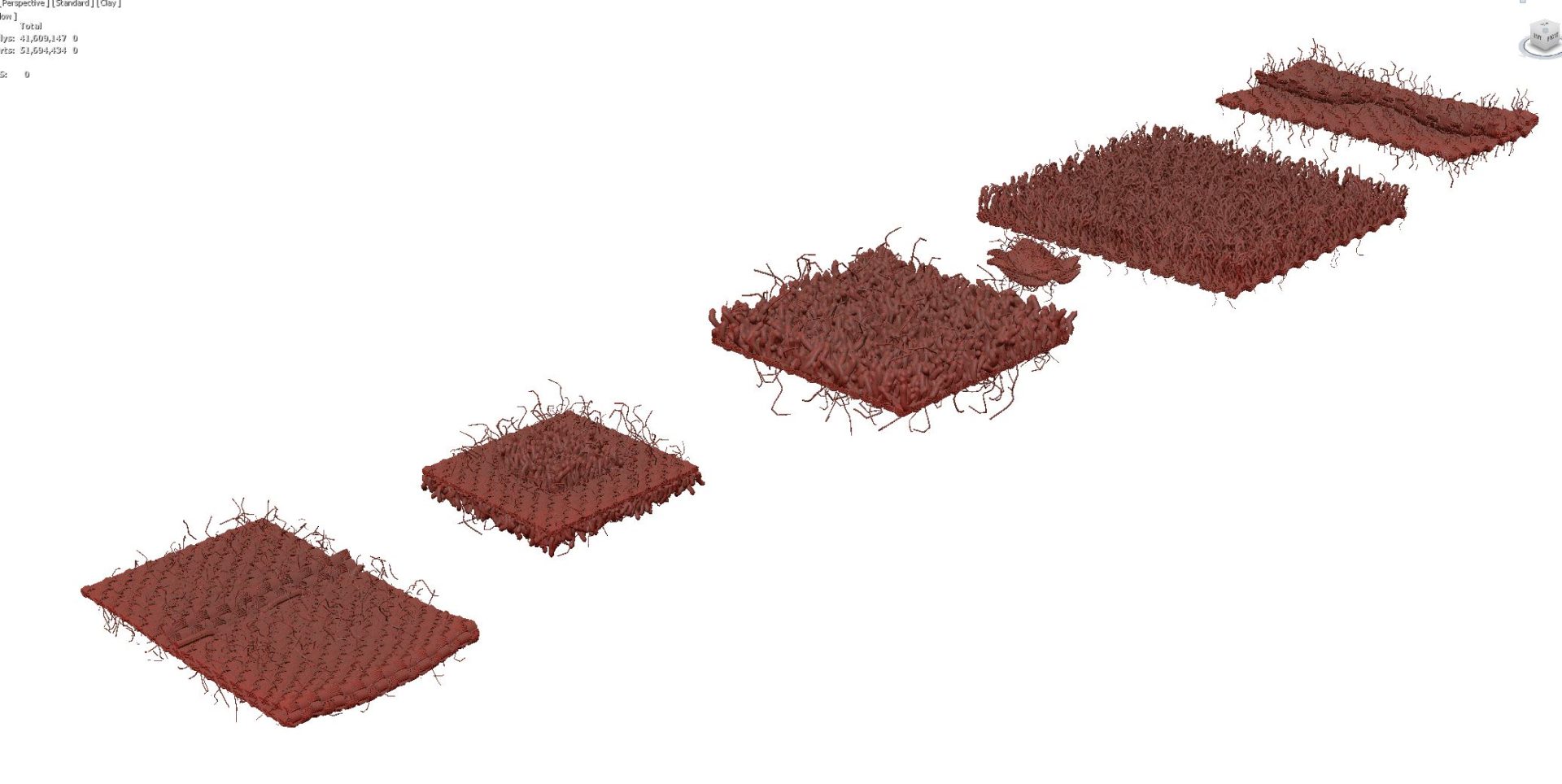

The cloth was simulated with tyFlow. The fine cloth details use a mesh I created to match the towels: a repeating pattern that is scattered using the FStorm GeoPattern feature. I love GeoPatterns. It's texture mapping, but in 3D. The skull towel mesh would break in the simulation because it contained irregular triangles, so I simulated a simplified proxy plane instead and referenced the towel directly to the tyFlow object with the Skin Wrap modifier.
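The proxy trick can be sketched in a few lines of Python. This is only the general idea of wrapping a detailed mesh to a simulated proxy, not the Skin Wrap modifier's actual algorithm, which blends the influence of several control vertices or faces. The names `bind` and `apply_wrap` are hypothetical, and each detail vertex simply follows its single nearest proxy vertex.

```python
import math

def bind(detail_verts, proxy_rest):
    """For each detail vertex, store the index of the nearest proxy vertex
    (in the rest pose) and the offset from that proxy vertex."""
    bindings = []
    for v in detail_verts:
        idx = min(range(len(proxy_rest)),
                  key=lambda i: math.dist(v, proxy_rest[i]))
        offset = tuple(a - b for a, b in zip(v, proxy_rest[idx]))
        bindings.append((idx, offset))
    return bindings

def apply_wrap(bindings, proxy_deformed):
    """Move each detail vertex by re-applying its stored offset
    to the deformed position of its bound proxy vertex."""
    return [tuple(p + o for p, o in zip(proxy_deformed[idx], offset))
            for idx, offset in bindings]

# Hypothetical data: two proxy vertices and two detail vertices just above them.
proxy_rest = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
detail = [(0.25, 0.0, 0.5), (0.75, 0.0, 0.5)]
bindings = bind(detail, proxy_rest)

# After the "simulation", the proxy vertices have dropped by different amounts.
proxy_sim = [(0.0, 0.0, -1.0), (1.0, 0.0, -2.0)]
wrapped = apply_wrap(bindings, proxy_sim)
```

The detailed mesh never goes through the solver, so its irregular triangles cannot break the simulation. Only the clean proxy is simulated, and the detail rides along.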